HBMS: Multimodal Client Interface

Human Behavior Monitoring and Support

When needed, the HBMS Support Engine assists a person by matching the person’s observed actions against the HCM knowledge base. To transmit assistive information to this person and to interact with them, HBMS has a multimodal user interface that works with different media types (audio, handheld devices, projector, laser pointer, light sources, etc.).

This interface is intended to be defined according to the MCA paradigm by means of a domain-specific modeling language (DSML), which is currently under development.

The current HBMS prototype version supports handhelds and audio devices.
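As a rough illustration of what "multimodal" means here, the following sketch routes one assistive message to the two media types the prototype is said to support. It is not taken from HBMS: the class names `AssistiveMessage` and `MultimodalDispatcher`, the channel keys, and the rendered strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AssistiveMessage:
    """A piece of assistive information for the supported person (hypothetical type)."""
    text: str


class MultimodalDispatcher:
    """Sends the same message to every registered output channel (assumed design)."""

    def __init__(self) -> None:
        # Maps a media type name to a function that renders the message for it.
        self._channels: Dict[str, Callable[[AssistiveMessage], str]] = {}

    def register(self, media_type: str, render: Callable[[AssistiveMessage], str]) -> None:
        self._channels[media_type] = render

    def dispatch(self, message: AssistiveMessage) -> List[str]:
        # Render once per channel, in registration order.
        return [render(message) for render in self._channels.values()]


dispatcher = MultimodalDispatcher()
dispatcher.register("handheld", lambda m: f"[handheld] show: {m.text}")
dispatcher.register("audio", lambda m: f"[audio] say: {m.text}")

outputs = dispatcher.dispatch(AssistiveMessage("Take the keys"))
for line in outputs:
    print(line)
```

Further media types (projector, laser pointer, light sources) would, under this assumed design, be added by registering one more renderer each.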

HBMS support client: uses a multimodal DSML-based interface

The kernel monitoring client is shared by both the HBMS admin interface and the HBMS caregiver interface of the kernel. It allows the user to monitor the events processed by the kernel, run simulations of sensor events, and perform administrative activities.

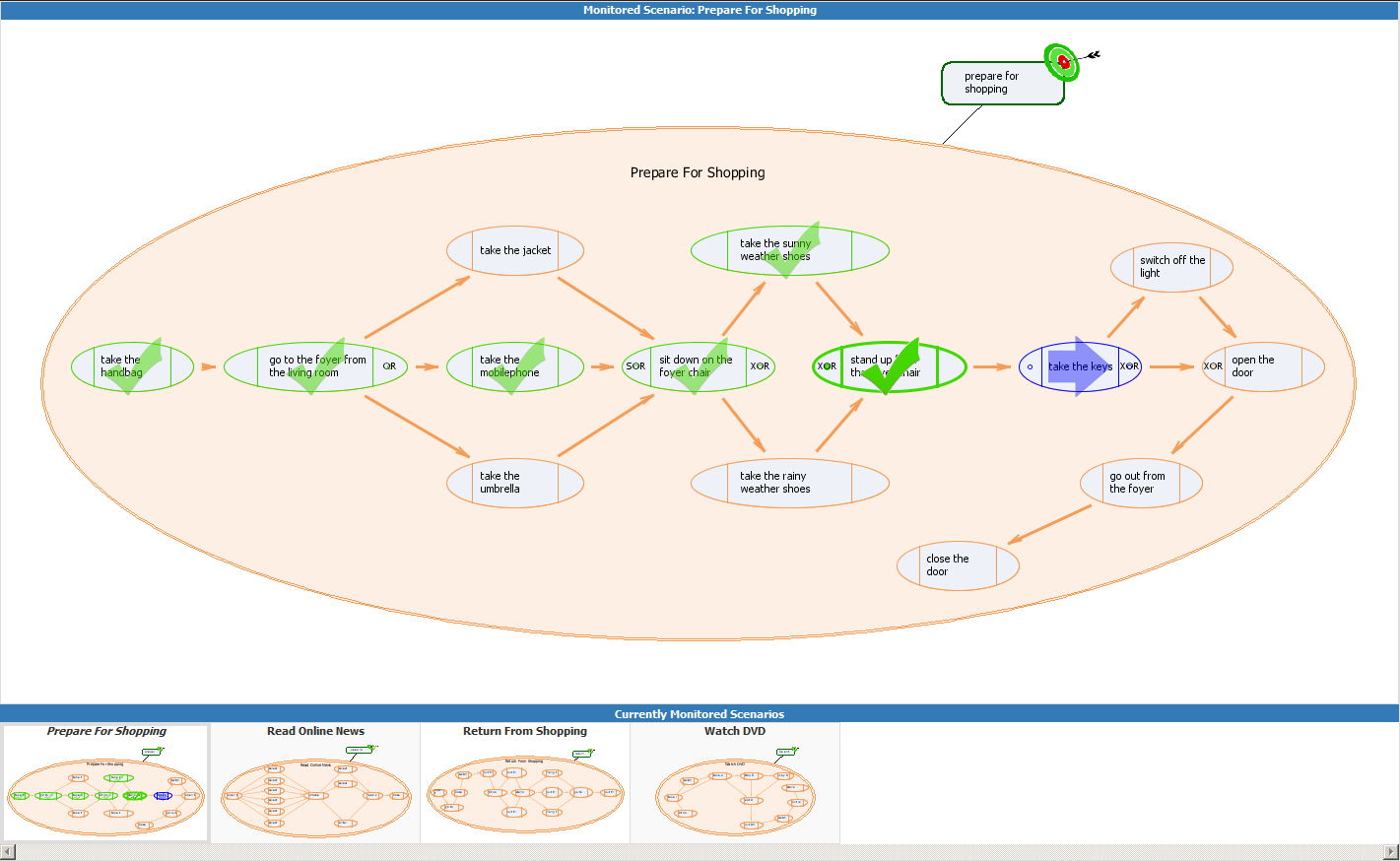

The main part of the monitoring client is an HBMS visualizer. It allows the user to see the current state of the matching session by

Kernel monitoring client and HBMS visualizer

Figure 1: HBMS Visualizer in action

The user has just performed the “stand up from the chair” operation after correctly performing “take the sunny weather shoes” and other preceding operations in the behavioral unit; the “take the keys” operation is shown as predicted.

The visualizer supports six different states of the operation element and three states of the goal element in behavioral unit models. These states (e.g. “correctly/wrongly matched as a session’s past operation”, “correctly/wrongly matched as a current operation”, “predicted”) are visualized by the color and thickness of the element’s outline.
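The mapping from element state to outline style can be pictured as a simple lookup table. The sketch below covers only the five operation states named above (the text does not name the sixth); the enum members, colors, and line widths are illustrative assumptions, not the values used by the actual visualizer.

```python
from enum import Enum
from typing import Dict, NamedTuple


class OperationState(Enum):
    # Only the states named in the text; the sixth state is not listed there.
    PAST_CORRECT = "correctly matched as a session's past operation"
    PAST_WRONG = "wrongly matched as a session's past operation"
    CURRENT_CORRECT = "correctly matched as a current operation"
    CURRENT_WRONG = "wrongly matched as a current operation"
    PREDICTED = "predicted"


class OutlineStyle(NamedTuple):
    color: str      # assumed color name
    width_px: int   # assumed outline thickness


# Hypothetical styling: current operations get a thicker outline than past ones.
OUTLINE_STYLES: Dict[OperationState, OutlineStyle] = {
    OperationState.PAST_CORRECT: OutlineStyle("green", 1),
    OperationState.PAST_WRONG: OutlineStyle("red", 1),
    OperationState.CURRENT_CORRECT: OutlineStyle("green", 3),
    OperationState.CURRENT_WRONG: OutlineStyle("red", 3),
    OperationState.PREDICTED: OutlineStyle("blue", 2),
}


def outline_for(state: OperationState) -> OutlineStyle:
    """Return the outline style used to draw an operation in the given state."""
    return OUTLINE_STYLES[state]


style = outline_for(OperationState.PREDICTED)
print(style.color, style.width_px)
```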

The visualizer queries the state of the matching session several times per second and changes the display based on the obtained information. As a result, it is possible to see

HBMS Visualizer in detail
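The visualizer's refresh behavior, querying the matching session several times per second and updating the display, follows a plain polling pattern. The sketch below shows that pattern under stated assumptions: `fetch_state` stands in for whatever call the monitoring client makes to the kernel, `on_change` stands in for the redraw, and the 0.25 s default interval is an arbitrary choice meaning "several times per second".

```python
import time
from typing import Callable, Optional


def poll_session(
    fetch_state: Callable[[], str],
    on_change: Callable[[str], None],
    interval_s: float = 0.25,
    max_polls: Optional[int] = None,
) -> None:
    """Query the session state repeatedly and report it only when it changes."""
    last: Optional[str] = None
    polls = 0
    while max_polls is None or polls < max_polls:
        state = fetch_state()
        if state != last:
            on_change(state)  # e.g. redraw the behavioral unit model
            last = state
        polls += 1
        time.sleep(interval_s)


# Scripted stand-in for the kernel: the current operation changes on the third poll.
scripted = iter([
    "stand up from the chair",
    "stand up from the chair",
    "take the keys",
    "take the keys",
])
changes = []
poll_session(lambda: next(scripted), changes.append, interval_s=0.01, max_polls=4)
print(changes)
```

Redrawing only on a state change keeps the display stable between polls; whether the real visualizer does this or redraws on every query is not stated in the text.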
